Description

The database consists of image sequences of 86 subjects performing facial expressions. The subjects were sitting in a chair in front of one camera. The background was a blue screen. Two light sources of 300W each, mounted on stands at a height of 130cm approximately were used. On each stand one umbrella was fixed in order to diffuse light and avoid shadows. The camera was able to capture images at a rate of 19 frames per second. Each image was saved with a jpg format, 896×896 pixels and a size ranging from 240 to 340 KB.

In the database participated 35 women and 51 men all of Caucasian origin between 20 and 35 years of age. Men are with or without beards. The subjects are not wearing glasses except for 7 subjects in the second part of the database. There are no occlusions except for a few hair falling on the face.

The images of 52 subjects are available to authorized internet users. The data that can be accessed amounts to 38GB. Twenty five subjects are available upon request and the rest 9 subjects are available only in the MUG laboratory.

There are two parts in the database. In the first part the subjects were asked to perform the six basic expressions, which are anger, disgust, fear, happiness, sadness, surprise. The second part contains laboratory induced emotions.

In the first part the goal was firstly to imitate correctly the basic expressions and secondly to obtain sufficient material. To accomplish the first goal, prior to the recordings, a short tutorial about the basic emotions was given to the subjects. The subjects were informed about how the six facial expressions are performed according to the ’emotion prototypes’ as defined in the Investigator’s Guide in the FACS manual. The aim was to avoid erroneous expressions, that is expressions that do not actually correspond to their label. After the subjects had learned the different ways that the six expressions are performed, they freely chose to imitate one of them. For example fear can be displayed either with the lips stretched (AU 20) or with the jaw drop (AU 26) or with the mouth closed and only moving the upper part of the face. The image sequences start and end at neutral state and follow the onset, apex, offset temporal pattern.

For each one of the six expressions we saved a few image sequences (usually three to five) of various lengths. Each sequence contains 50 to 160 images. The best image sequences were selected to become available. In addition, for each subject a short image sequence depicting the neutral state was recorded. The sequences with correct imitation of the expressions were selected to be part of the database. We created for each image sequence a video in avi format which helps to have a quick look at the expression. The number of the available sequences counts up to 1462.

The sequences are categorically labeled. In spite that they rely on EMFACS, they are not FACS coded yet. Moreover, 80 landmark facial points were manually annotated on several images. The landmark point annotation for 401 images of 26 subjects is publicly available. In addition to manual annotation, landmark points were automatically computed using Active Appearance Models. We build a three-level AAM model using the manually annotated landmark points and their images in training. There are 821 images of 28 subjects automatically annotated available to researchers.

In the second part of the database, the subjects were recorded while they were watching a video that was created in order to induce emotions. The subjects were sitting in a chair similar to the first part and they were aware that they were being recorded. The goal of this part of the database was to elicit authentic expressions in the laboratory environment in contrast to the posed ones. The subjects display various emotions and emotional attitudes. These include (apart from the six basic emotions) but are not limited to: annoyance, amusement, distraction, friendliness, sympathy, interest and excitement. There is one image sequence for each subject captured at 19 fps and at a resolution of 896×896. Each sequence contains more than 1000 images. These image sequences are not labeled yet.

More details can be found in the following paper: N. Aifanti, C. Papachristou and A. Delopoulos, The MUG Facial Expression Database, in Proc. 11th Int. Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS), Desenzano, Italy, April 12-14 2010.

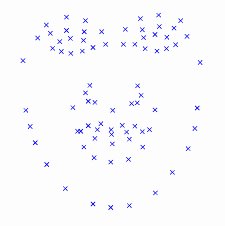

Examples

How to obtain the dataset

The MUG database is publically available for non-commercial use. To obtain access to the dataset, read the MUG database License Agreement, sign a printed copy of the agreement, and send a scanned copy to (adelo ( at ) eng.auth.gr) with:

Subject: MUG Facial Expression Database download requestPlease create an account that will allow me to download the MUG Facial Expression Database. I will abide by the Release Agreement version 1.

Then you will receive a username/password and detailed instructions. The reply email will not be send in real-time, but should arrive within one or two business days, under normal circumstances. Accounts will be deleted after a considerable amount of time. Please be careful, we only accept requests from academic email-addresses. Any requests from free email addresses will be refused. Distribution by mailing DVDs is possible only for the 25 subjects that are not available via the internet.

Some specifications for the structure of the database are provided after logging in for download. Any comments are welcome and please inform us for any difficulties while downloading or using the database. This site will inform the readers when the database will be updated.

Acknowledgements

We would like to thank the subjects that participated in the database. We would also like to thank the administrators and the people in the laboratory that helped in recordings.